Recognition of shared interests and the origins of a friendship

I came to know Doug Altman during the 1980s when we were both members of the editorial team at the British Journal of Obstetrics and Gynaecology. I was working at the National Perinatal Epidemiology Unit at that time; Doug was at the Division of Medical Statistics at the Medical Research Council’s Clinical Research Centre. Our meeting at the BJOG was the beginning of what became a very close friendship.

Doug and I shared an interest in trying to improve the quality of the manuscripts submitted to the BJOG. We commissioned three papers providing reporting guidelines for authors submitting reports of controlled trials, assessments of screening and diagnostic tests, and observational studies – early expressions of an interest that would later find fuller form in Doug’s creation of the EQUATOR Network (Enhancing the QUAlity and Transparency Of health Research).

We also discovered that we had both become interested in the scientific quality of reviews of research evidence, and in the potential for statistical synthesis of estimates derived from several similar studies. I had used this approach in a review of four randomised comparisons of different ways of monitoring fetuses during labour (Chalmers 1979), the findings of which prompted a further, very large controlled trial that confirmed the results of the meta-analysis (MacDonald et al. 1985).

Doug’s interest in the scientific quality of reviews of research evidence had been stimulated by two papers published in the late 1970s by Richard Peto (Peto et al. 1977; Peto 1978). These led Doug to prepare a seven-page typescript entitled ‘Evaluating a series of clinical trials of the same treatment’ for presentation at the 1981 meeting of the International Epidemiological Association in Edinburgh (Altman 1981). Over the next two years Doug extended the material in the seven-page typescript to a 40-page typescript with the same title (Altman 1983).

Doug’s pioneering conceptualisation of systematic reviews and the role of meta-analysis

Doug’s 1983 paper is important in the history of systematic reviews because it shows how presciently he identified what matters in the science of research synthesis. Unfortunately, it has remained hidden from view because it was never formally published. I think Doug first showed me “the almost final version of his 1983 paper (complete with handwritten corrections)” at the end of 1986. He said he intended to finalise it and submit it for publication, but that did not happen. As he admitted more than three decades later, “I wish I had published my ideas back in 1983” (Altman 2015, reprinted in Egger et al. 2019). Since 2011, the typescripts of both papers (Altman 1981; 1983) have been available in the James Lind Library, and the shorter paper, with an accompanying commentary by Doug, is also available in the Cochrane Methods supplement to the Cochrane Database of Systematic Reviews (Altman 2013).

In both these papers Doug touched on issues that would become more widely recognised as important by the 1990s. In particular, he made clear that techniques of statistical synthesis – ‘meta-analysis’ – were but one element in a science of research synthesis, and usually not the most important. He explained that, although statistical synthesis could address the element of between-study variability due to random variation, it could not deal with other sources of variability: differences in entry criteria, study populations, the methods used to generate comparison groups, baseline differences between treatment groups, degrees of blindness achieved, and variations in and deviations from treatment protocols. At the beginning of a nine-page section on ‘Combining the data’ in the longer paper, Doug comments that “Since the main purpose of the paper is to discuss the whole issue of whether or not to combine trials rather than to carry out a comparison of the available methods, not all of the possible statistical methods will be described” (Altman 1983, p 14). Both his papers stressed the likely importance of publication bias, and he regretted the lack (then) of hard evidence of the bias and the challenges this posed. He made the important and too often neglected point that:

Although the problem of possible publication bias may appear to be a major restriction on the validity of combining the results from several trials, it is important to realise that any such bias applies to the interpretation of individual studies, although this is always ignored and each study’s results taken at face value (Altman 1983, p 25).

Towards the end of his 1983 paper Doug presciently identified two desirable developments that would become widely appreciated by the end of the decade. First, the use of individual patient data:

In view of the non-statistical problems in the combination of results from different trials, the choice of statistical method is unlikely to matter greatly, but methods which make use of the raw data are definitely preferable to the combination of probabilities. The pooled estimate of relative risk should be presented with its confidence interval (Altman 1983, p 33).

Secondly, there is a paragraph in a section of the paper entitled ‘Ethical considerations’ which anticipates developments in thinking and practice during the 1980s and 1990s, and which Doug selected for attention after re-reading his paper some 30 years after drafting it (Altman 2013). This is the paragraph that had struck him:

[it] is important to consider whether the results of a series of studies of the same treatment should be accumulated on a regular basis in order to monitor the current state of knowledge about those treatments. Further trials might then be dependent on the combined significance of already completed trials but using a stricter level of statistical significance (say P<0.001) than is usually applied in single trials. Even without such information trials should perhaps not be given ethical committee approval unless the researchers had analysed the results of published trials in the way suggested in order to demonstrate that there was still uncertainty about the efficacy of the treatment, and the range of uncertainty encompassed clinically relevant benefit. Further, power calculations for a new trial could be conditional on the results of published trials (Altman 1983, p 27).

The origins of ‘Systematic Reviews in Health Care: Meta-analysis in context’

Following wider recognition of the need to improve the scientific quality of reviews (Mulrow 1987; Jenicek 1987; Oxman and Guyatt 1988), the opening of the Cochrane Centre in Oxford in October 1992 helped to generate interest in the science of research synthesis (Chalmers et al. 1992). I was delighted that Richard Smith, editor of the British Medical Journal, recognised this and proposed an all-day meeting run jointly by the BMJ and The Cochrane Centre. I was also glad that he agreed that the title of the meeting should refer to Systematic Reviews rather than to Meta-analysis, as had originally been proposed. The meeting was held at the Royal Institution on 7 July 1993. Its eight presentations covered the development of systematic reviews; doubts about them and the challenge of finding relevant studies; rationale and practicalities; and assessing, updating, and disseminating systematic reviews.

A series of articles about systematic reviews, based on the presentations made at the meeting, began in the 3 September 1994 issue of the BMJ. In his ‘Editor’s Choice’ column, Richard Smith noted that systematic review was “one of the most valuable tools in assessing new treatments and technologies” (Smith 1994a). He was even more supportive in his ‘Editor’s Choice’ column a few weeks later:

“Systematic reviews provide the highest quality evidence on treatment…The author of a systematic review poses a clear question, gathers all relevant trials (whether published or not), weeds out the scientifically flawed, and then amalgamates the remaining trials to reach a conclusion. Every stage in the process is crucial, and an article in the journal by Kay Dickersin and her colleagues shows how a careful Medline search for randomised controlled trials will not detect all such trials even in the journals indexed in Medline” (Smith 1994b).

Richard Smith went on to point out that systematic reviews are also important because – by amalgamating data from similar trials – they can increase the statistical power of treatment comparisons (Smith 1994b). These succinct explanations of the rationale for systematic reviews made by the Editor-in-Chief of one of the world’s most prominent medical journals were heartening to those of us calling for improvements in the scientific quality of reviews of research.

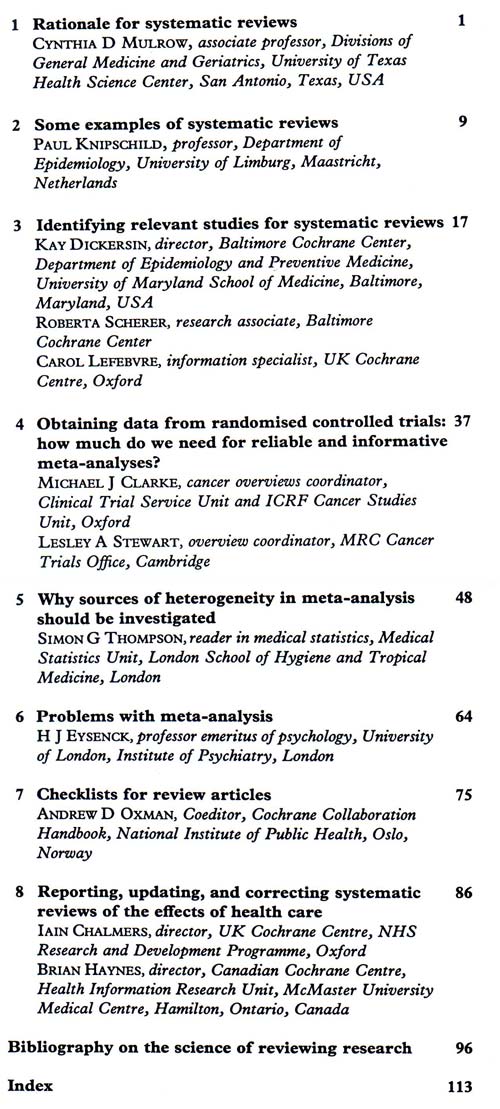

The BMJ’s series of articles on systematic reviews was well received and Richard Smith proposed that I should edit a compilation of the articles as a book. I accepted, on condition that Doug Altman would co-edit it with me, and I was pleased that both Richard and Doug agreed (Chalmers and Altman 1995). The book’s contents and contributors are shown in the Table and in the James Lind Library at https://www.jameslindlibrary.org/chalmers-i-altman-dg-1995/. The book introduces and illustrates systematic reviews; discusses data collection for them; presents contrary stances on the value of using meta-analysis to generate overall summary statistics; provides guidelines for assessing the trustworthiness of reviews; describes how systematic reviews are being prepared, updated and disseminated by the international network of people who together constitute the Cochrane Collaboration; and concludes with a classified bibliography for further reading. The book is dedicated to Thomas C Chalmers, “in appreciation of his many pioneering contributions to the science of reviewing health research, and in particular, for the first clear demonstration of the dangers of relying on traditional reviews of research to guide clinical practice.”

Our Preface to the book gave Doug and me an opportunity to explain why we had used the term ‘systematic review’ rather than the more technical neologism ‘meta-analysis’:

“A word about terminology: both the 1993 meeting and the book based on the proceedings have very deliberately used the term ‘systematic review’ rather than some of the alternatives which are in use. Use of the term ‘systematic review’ implies only that a review has been prepared using some kind of systematic approach to minimising biases and random errors, and that the components of the approach will be documented in a materials and methods section. Other terms – particularly ‘meta-analysis’ – have caused confusion because of the implication that a systematic approach to reviews must entail quantitative synthesis of primary data to yield an overall summary statistic (meta-analysis). As we hope this book will help to make clear, this is not the case. In addition to those circumstances in which statistical synthesis (meta-analysis) of results of primary research is not advisable, there will be others in which it is quite simply impossible. It is just as important to take steps to control biases in reviews in these circumstances as it is to do so in circumstances in which meta-analysis is both indicated and possible” (Chalmers and Altman 1995).

Doug reiterated this point in his 2013 personal reflection, ‘Twenty years of meta-analysis and evidence synthesis methods’. He wrote:

As time went on we have realized that there are many hidden problems, nuances, extensions, and so on. And there have been big changes in strategy. The biggest impact probably came from the early realization that the statistical analysis is a relatively simple part of a rather complex set of actions which we now label as a systematic review. (Altman 2013)

The issue was addressed neatly in the title chosen for the second and third editions of the book: ‘Systematic Reviews in Health Care: Meta-analysis in context’ (Egger et al. 2001). I am grateful to the editors of the third edition (Egger, Davey Smith and Higgins) for inviting me to draw attention to the pioneering thinking and unpublished writing about research synthesis of our much loved, late lamented co-editorial colleague, Doug Altman.

Note

This article will be republished in Egger M, Davey Smith G, Higgins J (In Press). Systematic reviews in health care: Meta-analysis in context. 3rd ed. London: BMJ Books.

Acknowledgements

I am grateful to Mike Clarke, George Davey Smith, Anne Eisinga, Julian Higgins, and Richard Smith for comments on an earlier draft of this text.

This James Lind Library article has been republished in the Journal of the Royal Society of Medicine 2020;113:119-122.

References

Altman DG (1981). Evaluating a series of clinical trials of the same treatment. Unpublished seven-page summary of the author’s presentation at a meeting of the International Epidemiological Association in Edinburgh, August 1981. Available from: jameslindlibrary.org/altman-dg-1981/

Altman DG (1983). Evaluating a series of clinical trials of the same treatment. Unpublished 40-page development of the author’s seven-page summary (Altman 1981) of his presentation at a meeting of the International Epidemiological Association in Edinburgh, August 1981. Available from: jameslindlibrary.org/altman-dg-1983/

Altman DG (2013). Twenty years of meta-analysis and evidence synthesis methods: a personal reflection. Cochrane Methods. Cochrane Database of Systematic Reviews 2013; Suppl 1:2-11. Available from: https://methods.cochrane.org/sites/default/files/public/uploads/2013_cochrane_methods_editorial_doug_altman.pdf

Altman DG (2015). Some reflections on the evolution of meta-analysis. Research Synthesis Methods 6:265-267.

Chalmers I (1979). Randomised controlled trials of fetal monitoring 1973-1977. In: Thalhammer O, Baumgarten K, Pollak A (eds), Perinatal Medicine, pp 260-265. Stuttgart: Georg Thieme.

Chalmers I, Altman DG (1995). Systematic reviews. London: BMJ Books.

Chalmers I, Dickersin K, Chalmers TC (1992). Getting to grips with Archie Cochrane’s agenda: All randomised controlled trials should be registered and reported. BMJ 305:786-788.

Egger M, Davey Smith G, Altman DG (2001). Systematic reviews in health care: Meta-analysis in context. 2nd ed. London: BMJ Books.

Jenicek M (1987). Méta-analyse en médecine. Évaluation et synthèse de l’information clinique et épidémiologique. [Meta-analysis in medicine: evaluation and synthesis of clinical and epidemiological information] St. Hyacinthe and Paris: EDISEM and Maloine Éditeurs.

MacDonald D, Grant A, Sheridan-Pereira M, Boylan P, Chalmers I (1985). The Dublin randomized controlled trial of intrapartum fetal heart rate monitoring. American Journal of Obstetrics and Gynecology 152:524-539.

Mulrow CD (1987). The medical review article: state of the science. Annals of Internal Medicine 106:485-488.

Oxman AD, Guyatt GH (1988). Guidelines for reading literature reviews. Canadian Medical Association Journal 138:697-703.

Peto R, Pike MC, Armitage P, Breslow NE, Cox DR, Howard SV, Mantel N, McPherson K, Peto J, Smith PG (1977). Design and analysis of randomized clinical trials requiring prolonged observation of each patient. II. Analysis and examples. British Journal of Cancer 35:1-39.

Peto R (1978). Clinical trial methodology. Biomedicine 28 (special issue):24-36.

Smith R (1994a). Hearts and minds. BMJ 309: 3 September.

Smith R (1994b). Systematic reviews, stupid doctors, and red meat. BMJ 309: 12 November.